Duke Farms mobile app

Duke Farms, Samadhi Games, June 2019

Summary

Duke Farms, a 1,000+ acre nature and research preserve in New Jersey, receives 240,000 visitors each year. The expansive property lacks clear wayfinding signage, and many events and programs run on any given day. I worked with a small team to create an app that helps visitors navigate the property and stay informed of events and educational offerings.

Keywords

Mobile App ● Nature Preserve ● Wayfinding ● EdTech

Context

A few key points

01

Project overview

I worked with the developer and the product owner to design and build a mobile app that could help visitors navigate the 1,000+ acre property, stay up to date on events and activities, and more easily access educational information about the sites. To keep cost and time down, the app would be based on the core infrastructure of The Highline app, a previous app built by the developer.

03

Stakeholders

Stakeholders included the product owner (the programs manager at Duke Farms), other leadership at the organization, Duke Farms visitors and patrons, the developer, and myself.

05

The team

-

William Wilson, Product Owner

-

Marc DeLegge, Developer

-

Vanessa Sanchez, UX Designer

02

Necessity

Duke Farms, a 1,000+ acre nature and research preserve in New Jersey, needed an app that would help their 240,000 yearly visitors navigate the property and easily stay informed of events and educational offerings. The app needed to be available on iOS and Android platforms within 8 months of the initial inquiry, and 5 months after project kick-off.

04

My role

As the UX designer, I conducted observational research and gathered requirements from business stakeholders. I led information architecture, UI design, and content gathering for the app and app store.

06

Skills used

-

1x1 Interviews

-

Observational Research

-

Information Architecture

-

UX Strategy

-

UI Design

-

Asset Creation

-

Sketch + InVision

Timeline

8 Months

Problem

What did we uncover about the problem space?

What problem does this project address?

-

There is an increasing presence of virtual AI-led and AI-analyzed interviews in hiring processes. It is not uncommon for job applicants to go through several automated phases in the job application process before their application materials are viewed by a human.

-

Automated phases preceding human interaction can include algorithmically targeted advertisement of a job position, completing an online application, passing a resume through an Applicant Tracking System (ATS), completing an online technical assessment, and undergoing a one-way interview through video or chat.

What is the stakeholder impact?

The impacts on stakeholders include:

-

Job applicants who don't fit the benchmark data may experience encoded bias at scale. They have limited power and agency to alter the practices of hiring companies or the development of emotional AI (EAI) tools, other than contributing to the benchmark data where that is possible. However, for people with disabilities, disclosure of disability status is optional under the Americans with Disabilities Act, and many cognitive disabilities present differently in each individual, making it difficult for neural nets to identify useful patterns. Many older applicants lack the technical knowledge needed to intervene, or access to opportunities to do so.

What have been specific pain points of stakeholders?

-

For job applicants, pain points include unfair assessment, encoded bias at scale, lack of human feedback about interview performance, and increased stress, particularly for less experienced candidates.

-

For hiring companies, pain points include the need for streamlined hiring processes during growth, understaffed HR departments, lack of technical knowledge (for many), unintended discriminatory practices, missing out on qualified applicants, and lack of diversity in their workforce.

What solutions already exist?

-

There are several issues with using facial recognition as a candidate behavioral diagnostic tool, including the well-researched failure of such systems to function accurately on users with darker skin tones (Perkowitz, “The Bias in the Machine: Facial Recognition Technology and Racial Disparities”).

-

Recruiters generally perceive AI-enabled software not as a threat but as another tool that can simplify the search process, which can be an advantage in a highly competitive hiring space (Li et al., 2021).

Opportunity

What opportunity did we identify from the problem space?

Our objectives for users

-

Improve awareness of the increasing prevalence of AI in hiring processes

-

Provide better understanding of how AI works in this context

-

Build awareness of how this kind of AI may read their facial expressions

-

Enable them to identify what kind of visual feedback they find most useful

-

Invite them to express their opinions about the use of AI in hiring practices

-

Propose the option of preparing for real future job interviews using a similar kind of AI facial analysis tool

Our objectives for the team

-

To learn how emotion-tracking/EAI makes participants feel

-

To learn what information participants want to see in their EAI results

-

To learn which visualization of emotion-tracking reports works best

-

To devise a feasible experiment that we could execute within the 3-month time constraint, using a methodical process that would produce meaningful results and insights of value to the field of human-AI interaction.

Milestones

Feb 9, 2023 - Submit project proposal document for approval by professor

Feb 20, 2023 - Complete IRB training for human subjects research

Mar 6, 2023 - Present topic and detailed research plan for full approval from professor

Apr 10, 2023 - Complete all interviews with participants

Apr 17, 2023 - Final presentation

Apr 24, 2023 - Paper submission

What were our metrics of success?

-

Including at least 15 citations from related works to support our understanding of the problem space, our goals, and statements in our paper

-

Logical connection between each phase of our study

-

Identifying and mitigating risk to participants according to IRB standards

-

Recruiting 6 to 12 participants

-

Proof of thorough and logical methodology that demonstrates an equitable approach and clean data

-

Completeness and quality of deliverables

-

Meeting milestones

-

Final grade

Design

What did we create and how did we get there?

Ideation and Iteration

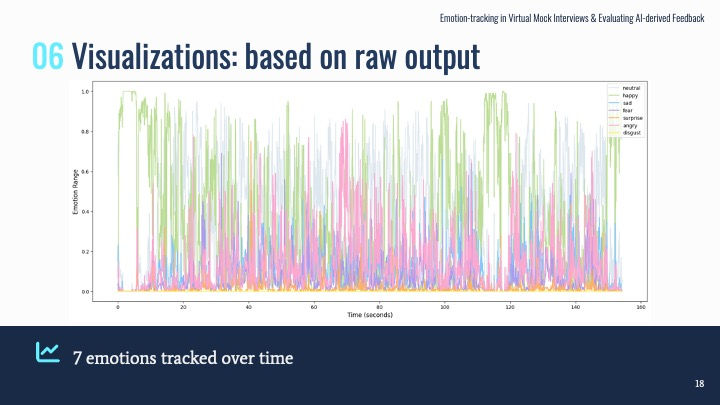

We knew we wanted to generate different kinds of visualizations, but we also wanted to think through how we would present them. So we explored a few ideas about what information we could present to participants, how to structure it, and what the interface could look like.

Prototype

After some team feedback and seeing the data visualizations we would be using, I generated an interactive prototype of a customized report UI where we could embed the data visualizations, the participant's mock interview clips, a case study summary (HireVue 2020) and some follow-up questions.

Facial Analysis Testing

Before running the mock interview sessions with participants, we conducted test analysis on video samples of ourselves to make sure the EAI software worked correctly and to collect sample data to work out how we would approach the data visualizations.

Design Decisions

-

Provide participants with data visualizations of their overall individual EAI readings and of how those readings compared with the aggregate readings of the other participants. This offers high-level points of comparison.

-

Show data visualizations in various formats of individual responses beside the video clip of that response for comparison.

-

Simplify the data visualizations from 7 emotions to 3 emotions (positive, negative, neutral) to create a visual that is easier to read.

-

Eliminate the CSV output option, because the average person would not find value in it.
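
To illustrate the simplification from 7 emotions to 3, here is a minimal sketch. The grouping in `VALENCE` is an assumption made for illustration only; which emotion belongs in which bucket is a judgment call, and surprise in particular could reasonably be placed elsewhere. The example scores are made up.

```python
# Hypothetical grouping of the 7 emotion labels into 3 buckets.
# This mapping is an assumption for illustration, not a record of the study.
VALENCE = {
    "happy": "positive",
    "surprise": "positive",  # assumption: surprise is ambiguous
    "neutral": "neutral",
    "sad": "negative",
    "fear": "negative",
    "anger": "negative",
    "disgust": "negative",
}


def collapse(scores):
    """Sum per-emotion scores into positive, negative, and neutral totals."""
    totals = {"positive": 0.0, "negative": 0.0, "neutral": 0.0}
    for emotion, score in scores.items():
        totals[VALENCE[emotion]] += score
    return totals


# Made-up scores for a single video frame (they sum to 1.0).
frame = {
    "neutral": 0.20, "happy": 0.60, "sad": 0.03, "fear": 0.05,
    "surprise": 0.10, "anger": 0.02, "disgust": 0.00,
}
three = collapse(frame)
print(three)
```

Three buckets lose detail (fear and anger become indistinguishable), which is the trade-off accepted in exchange for a visual that is easier to read.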

Research

How did we conduct research and what was the value?

Literature Research

Silvia DalBen, PhD candidate, led the literature research. This step was foundational to the study: it showed us what prior work had been done, so we could fill a gap while building on existing work.

Research Ethics

I led research ethics. Three out of four team members completed the Institutional Review Board (IRB) training for social and behavioral research on human subjects. We learned about codes of ethics, federal regulations, informed consent, privacy, and confidentiality. Based on those standards, we identified possible risks to our participants during the planning stage:

-

Sharing of personally identifiable information

-

Video recording of participants

-

Sharing of personal experiences / feeling vulnerable

-

Intentionally causing participants to experience anxiety during interview

-

Possible feelings of anxiety during AI feedback portion

Screening for recruitment

We used a screener survey with questions designed to obtain a relevant and equitable sampling to meet our participant quota. We had 12 questions covering interest/availability, demographic information, job seeking status and familiarity with AI technology. Out of 32 respondents, 5 did not pass the screener, leaving us with 27 viable respondents.

Data cleaning and selections

-

We reconciled the responses to one survey question that changed after the survey was released.

-

We added a Yes/No column to flag respondents who selected two or more races/ethnicities

-

We prioritized sampling by 4 attributes:

-

Age (because we had very few people age 35 and older)

-

Skin tone (because this was a major point of interest in the study)

-

Gender identification (because we wanted an even distribution)

-

Familiarity with AI (because we had a good range)

-

We shortlisted 12 participants with 4 alternates

-

1 backed out

-

2 did not respond

-

9 participants total were interviewed

-

9 remote mock 1x1 interviews on Zoom

-

Team members scheduled Zoom interviews over 1 week

-

Verbal informed consent to record was obtained at the start of each session

-

Interviewers followed a script and asked 3 behavioral questions designed to elicit neutrality (baseline), confidence, and stress:

-

Can you tell me about yourself?

-

Can you tell me about a time you went above and beyond?

-

Can you tell me about a time you overcame a team conflict or challenge?

-

We ended with 3 post-interview questions:

-

(List the 7 emotions) What emotions do you think you displayed the most?

-

How do you feel about AI analyzing your performance?

-

Have you ever used a mock video interview tool to practice? Which one?

Facial Expression Recognition (FER)

Kyle Soares led technology research and development. He leveraged an open source Python library used for sentiment analysis of images and videos (Source: https://pypi.org/project/fer/):

-

Multi-task cascaded convolutional neural network (MTCNN) model for face detection

-

Dataset: FER-2013, Pierre-Luc Carrier and Aaron Courville, ICML: Challenges in Representation Learning

-

Analyzes videos per frame for 7 emotions: Neutral, Happy, Sad, Fear, Surprise, Anger, Disgust
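
To make the per-frame analysis concrete, here is a minimal sketch of one way to summarize a clip once each frame has been scored. It does not call the library itself. The input shape (one dictionary of emotion scores per frame) and the function names `dominant` and `dominant_share` are assumptions for illustration, and the scores are made up.

```python
from collections import Counter

EMOTIONS = ("neutral", "happy", "sad", "fear", "surprise", "anger", "disgust")


def dominant(frame_scores):
    """Return the highest-scoring emotion for one frame.

    Missing emotions count as 0.0; ties go to the earlier entry in EMOTIONS.
    """
    return max(EMOTIONS, key=lambda emotion: frame_scores.get(emotion, 0.0))


def dominant_share(frames):
    """Return the fraction of frames in which each emotion was dominant."""
    if not frames:
        return {}
    counts = Counter(dominant(frame) for frame in frames)
    return {emotion: counts[emotion] / len(frames) for emotion in EMOTIONS}


# Made-up scores for four frames of one interview answer.
frames = [
    {"neutral": 0.7, "happy": 0.2, "fear": 0.1},
    {"neutral": 0.5, "happy": 0.4, "sad": 0.1},
    {"neutral": 0.1, "happy": 0.8, "surprise": 0.1},
    {"neutral": 0.3, "fear": 0.6, "sad": 0.1},
]
shares = dominant_share(frames)
print(shares)
```

Counting dominant frames is only one possible summary; averaging the raw scores per emotion is an equally plausible alternative and can give a different picture when scores are close.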

Data Pre-processing and Visualization of Results

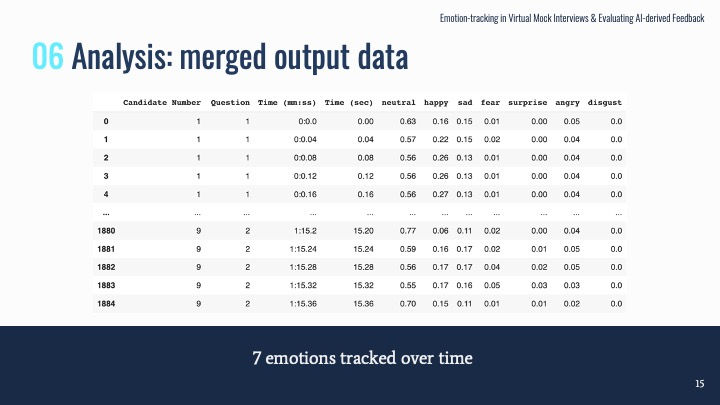

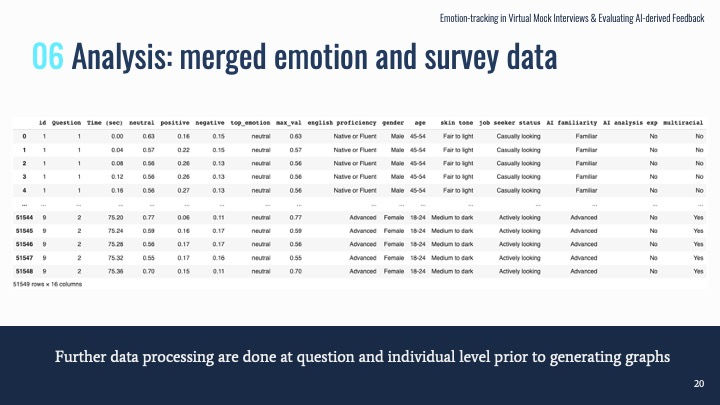

Dhanny Indrakusuma led data pre-processing and visualization. The following summarizes her process:

-

Analyzed the output data from the interviews (CSV format with timestamps and emotion analysis output)

-

Merged model's output data with information from screener survey

-

Generated insights by comparing each participant's emotion readings across interview questions and across demographic groups
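
The merge-and-compare steps above can be sketched as follows, using only the Python standard library. Every column name (`participant_id`, `question`, `timestamp`, `positive`, `age_group`) and every value is hypothetical; the actual files and tooling used in the study are not described here.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical model output: one row per analyzed frame.
emotion_csv = """participant_id,question,timestamp,positive
P1,Q1,0.0,0.2
P1,Q1,0.5,0.4
P1,Q3,0.0,0.1
P2,Q1,0.0,0.6
P2,Q3,0.0,0.5
"""

# Hypothetical screener survey: one row per participant.
screener_csv = """participant_id,age_group
P1,18-34
P2,35+
"""

# Index the screener by participant so each frame can be joined to it.
screener = {
    row["participant_id"]: row
    for row in csv.DictReader(io.StringIO(screener_csv))
}

by_question = defaultdict(list)  # (participant, question) -> scores
by_group = defaultdict(list)     # demographic group -> scores

for row in csv.DictReader(io.StringIO(emotion_csv)):
    score = float(row["positive"])
    group = screener[row["participant_id"]]["age_group"]
    by_question[(row["participant_id"], row["question"])].append(score)
    by_group[group].append(score)

per_question = {key: mean(values) for key, values in by_question.items()}
per_group = {key: mean(values) for key, values in by_group.items()}
print(per_question)
print(per_group)
```

With only 9 participants, group averages like these are descriptive at best and should not be read as evidence of a demographic effect.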

9 Follow-Up Interviews and Participant Insights

We conducted follow-up interviews with participants to get their reactions to individualized EAI reports.

-

Sentiment: Overall positive response to reports

-

Ground Truth: 5/9 participants agreed with the EAI analysis while many were surprised

-

Concerns: Most participants were concerned about the use of EAI analysis in future interviews, especially if it makes the final hiring decision

-

Satisfaction: 7/9 participants would use EAI tool again

Team Insights

We recognized some factors that may have impacted our results:

-

Limited access to controlled interview environments

-

Results might be affected by camera angle or lighting

-

Difficult to elicit realistic behaviors from participants in a mock interview

-

Participants may have had different reactions based on who was interviewing them; although our team members had scripts to follow and were paired with strangers, some of us deviated from the script, some of us chose to be more personable, and some of us were intentionally flat.

Impact on Participants

Participants expressed a range of attitudes and levels of curiosity about the EAI that was analyzing them:

-

"[The AI] made me curious and made me wonder why it's answering the way it is."

-

"I feel I have to exaggerate facial expressions to convey positive emotions. It's not natural."

-

"I wasn't really thinking about it."

-

"I'd rather start my own business than use AI to become someone I'm not."

-

"It's not just my expression that matters; what about my voice, my body language, etc.?"

Future Work

Possible future work to follow up on this study includes:

-

Emotional analysis as prep tool: Can emotional analysis be used with NLP analysis for better interview preparation?

-

Bias study: Who is most affected by AI emotional analysis in interviews?

-

Connect emotions to performance: Which emotions are best for job offers?

Results

How did things turn out and what did we learn?

Performance Against Objectives

Our team not only met all the objectives we set for ourselves; we went above and beyond, leveraging our unique skill sets to produce a set of deliverables none of us could have produced on our own.

Lessons Learned

The primary lesson I learned is that it pays off to develop a detailed research plan and methodology at the outset of a project. Some other lessons include:

-

Reality will force plans to shift, so be flexible and have back-up plans

-

Communication is challenging but important; try to meet people where they're at

-

In a good team, everyone wants a chance to contribute

-

Document everything

Areas for Future Improvement

Overall, our team collaborated well, hit our milestones, and delivered a successful outcome. Areas for future improvement include:

-

Alignment on all interview protocols

-

Adherence by all team members to interview protocols to protect participant data

-

Alignment on goals concerning ethics and IRB training

-

Team communication